Introduction to philosophy tends to be a useless class. At its best, it tends to feel like a drier version of the stuff you argue about with your friends while high. At its worst, it feels like listening to high people argue while you’re sober. Neither one makes you feel like you’ve accomplished that much more than high talk.

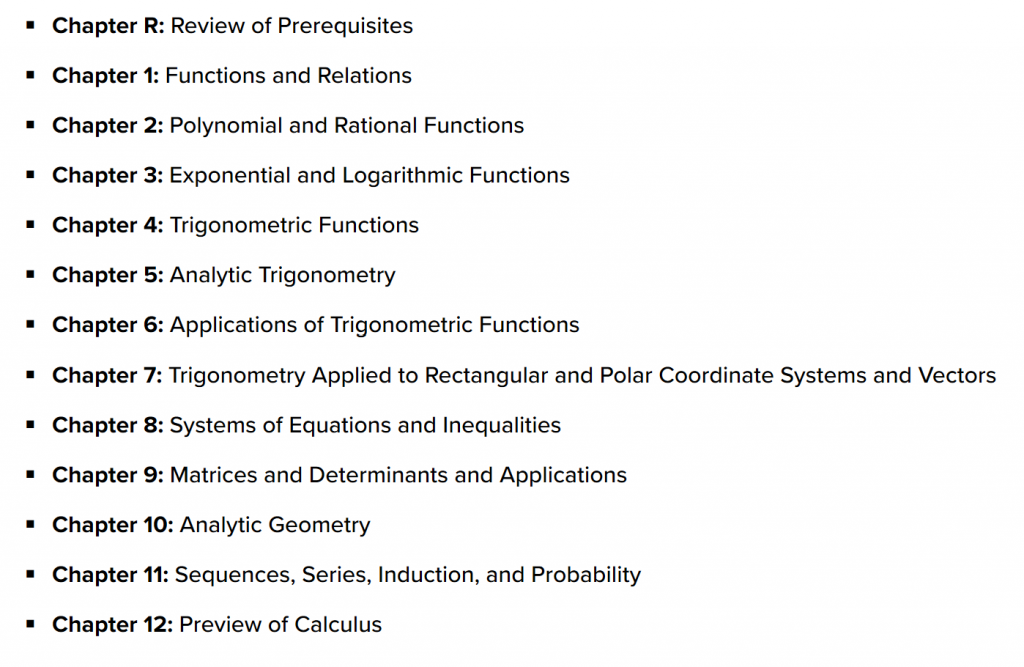

These problems are structural. It’s not just how the classes are taught, but what’s taught in the classes. For instance, take a look at the syllabus for this Coursera course, which actually receives great reviews.

Syllabus to Introduction to Philosophy

- What is Philosophy?

- Morality: Objective, Relative or Emotive?

- What is Knowledge? And Do We Have Any?

- Do We Have an Obligation to Obey the Law?

- Should You Believe What You Hear?

- Minds, Brains and Computers

- Are Scientific Theories True?

- Do We Have Free Will and Does It Matter?

- Time Travel and Philosophy

Judging by the reviews (4.6 stars from 3,941 ratings!), this is probably a fun class. But this class, without a doubt, is pretty useless.

How do I know that? Well, because literally zero of the questions are answered. It says so in the syllabus. For example, this is how they discuss “Morality: Objective, Relative or Emotive?”:

We all live with some sense of what is good or bad, some feelings about which ways of conducting ourselves are better or worse. But what is the status of these moral beliefs, senses, or feelings? Should we think of them as reflecting hard, objective facts about our world, of the sort that scientists could uncover and study? Or should we think of moral judgements as mere expressions of personal or cultural preferences? In this module we’ll survey some of the different options that are available when we’re thinking about these issues, and the problems and prospects for each.

It’s both sides-ism. It literally guarantees you that you won’t actually get an answer to the question. The best you can get is “options”. This both sides-ism is even worse for those questions that obviously have right answers. Yes, we have knowledge. Yes, we have an obligation to obey the law most of the time. Yes, most scientific theories are true.

Now, it’s possible to cleverly argue these topics using arcane definitions to make a surprisingly compelling case for the other side. That can be fun for a bit. But introduction to philosophy should be about providing clear answers, not confusing options.

Let me make my point with an analogy. Imagine going into an introductory astronomy course, knowing very little about astronomy besides common knowledge. The topic of the first lesson: “Does the Earth revolve around the sun?” The professor then would present compelling arguments for whether or not the Earth revolves around the sun, without concluding for either side.

If the class was taught well, a student’s takeaway might be something like, “There are very compelling arguments for both sides of whether or not the Earth revolves around the sun.” The student would probably still assume that the Earth revolved around the sun, but assume that knowledge was on a shaky foundation.

This would make for a bad astronomy class, which is why it’s not done. But this is done all the time in philosophy. In fact, most of the readers of this essay, if they only have a surface level impression of philosophy, probably assume philosophy is about continually arguing questions without ever coming to conclusions.

That’s not what philosophy is. At least, that’s not what most of it is. Philosophy is, in fact, the foundation of how to think and how to evaluate questions. Every single academic subject started in or was heavily influenced by philosophy, and philosophy can still contribute to all of them.

Making philosophy feel useful again

Introduction to philosophy, properly taught, should be like teaching grammar. Students can think and analyze without philosophy, just like they can speak and write without knowing the formal rules of grammar. But philosophy should provide them with the rigorous framework to think more precisely and, in turn, analyze subjects and ideas in a way that they could not before. Philosophy should change the way a student thinks as irrevocably as knowing when exactly to use a comma changes the way a student writes.

In order for that to be the case, though, introduction to philosophy has to be a different class. It has to be a class with clear answers and clear takeaways, rather than a class with fun questions and arcane discussions. It has to be explicitly didactic: philosophy has things to teach, not just things to discuss.

If I were to design a philosophy course, that’s what I’d do. I’d make a course that assumed no background in philosophy, took a student through the most important, life-changing ideas in philosophy, and gave clear, actionable ways to change how a student should think and live. At the end of the course, I’d want a student to feel confident taking everything I taught out of the classroom to affect the rest of their lives.

And you know what? That’s exactly what I did when I made my own introduction to philosophy course.

Let me give some background. Although I’ve always loved self-studying philosophy, I only took two philosophy courses in college, and I got a B in one and a B+ in the other. The first featured a large, soft man who droned about theory of knowledge while covered in chalk dust. The second featured a hyperactive woman who attempted to engage the class in discussion about our ethical intuitions, while I attempted to engage my intuitions about Flash games (laptops are always a danger in boring classes).

After college, however, a happenstance meeting got me a job teaching a massive online philosophy course to a Chinese audience. This was a difficult job: I was teaching philosophy in these students’ second language, these students were paying several hundred dollars for the course, and they had no reason to be there besides their own interest (not even grades). The students could drop my class at any time and get a refund.

In fact, because I got realtime viewership numbers, I could literally see people drop my class anytime I ventured into a boring subject. It was terrifying and exhilarating. I lasted 1.5 years in this company (until they switched to Chinese language instruction only), taught around 5000 students total, and went through 3 complete overhauls of my syllabus.

By the time of my last overhaul, I had decided that the guiding principle of my class would be as I wrote above: a backbone to the rest of your intellectual life. Specifically, I had 3 sections to my course: how to think, how to live, and how things should work.

My own course: how to think, how to live, and how things should work

I chose those 3 sections because I felt like those were the most important things that philosophy had to offer, and what had the greatest impact on my own life.

“How to think” directly related to both the academic subjects that my students (most of whom were in college or right out of college) were familiar with, and the later sections of my course. As I described it to my students, the takeaways from “how to think” served as a toolbox.

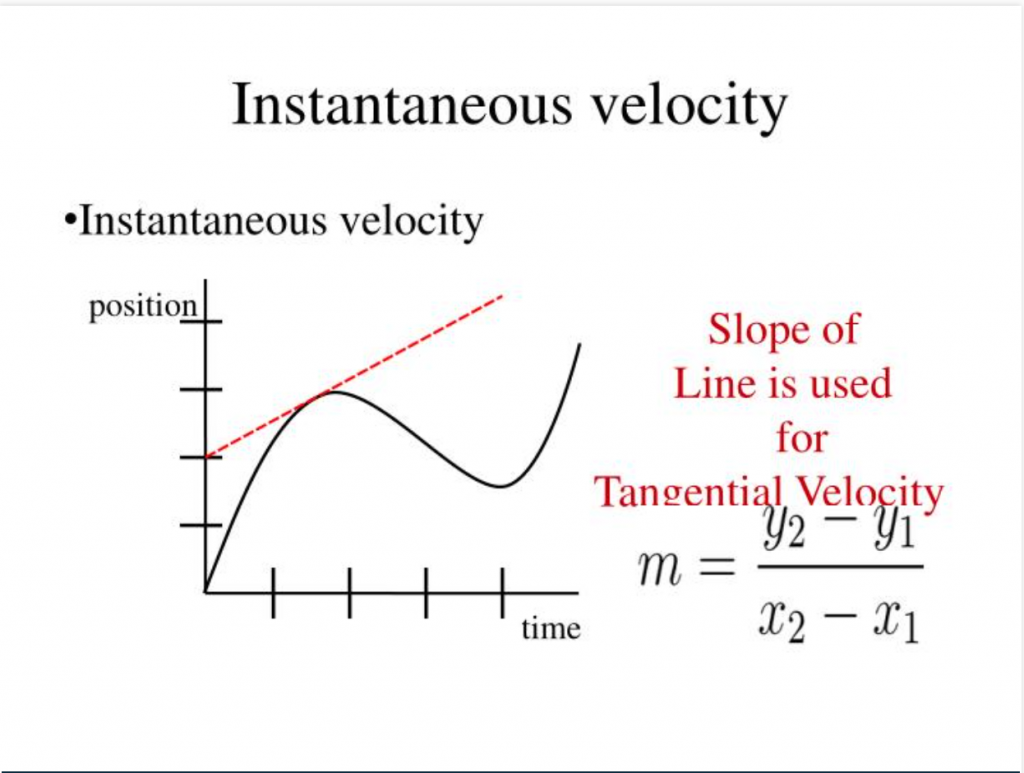

The power and the limitations of deduction, induction, and abduction apply to everything built on them, which basically encompasses all academic knowledge. It’s like starting a boxing class with how to throw a punch: a pretty reasonable place to start.

“How to live” was what it sounded like: how to live. I didn’t want to simply call it ethics, as I wanted to make it clear to my students that they should take these lessons out of the classroom. After I described the ethical philosophies to my students, we evaluated them both logically, using the tools from “how to think”, and emotionally, seeing if they resonated.

If the ethical philosophy was both logical and emotionally resonant, I told my students to be open to changing how they lived. All philosophy should be able to be taken out of the classroom, especially something so near to life as ethics. I’m just as horrified by a professor of ethics who goes home and behaves unethically as I would be by a professor of virology who goes home and writes anti-vax screeds.

Finally, “how things should work” was my brief crash course in political philosophy. Political philosophy is a bit hard to teach because the parts that are actually practicable tend to be sequestered off into political science. It’s also hard to teach because, frankly, college students don’t have a lot of political pull anywhere, and China least of all.

So, instead, I taught my students political philosophy in the hopes that they could take it out of the classroom one day. As we discussed, even if their ultimate position is only that of a midlevel bureaucrat, they still will be able to effect change. In our final lesson, actually, we talked about the Holocaust in this respect. The big decisions came from the leaders, but the effectiveness of the extermination came down to the decisions of ordinary men.

Above all, I focused in each section on how what they learned could change their thinking, their lives, and their actions. To do so, I needed to focus on takeaways: what should my students be taking away from the work and life of Socrates, Wittgenstein, or Rawls? As an evidently mediocre philosophy student myself, I am all too aware that asking students to take away an entire lecture is frankly unreasonable. I mean, I taught the course and I have trouble remembering entire lectures a few years later.

So, I focused on key phrases to repeat over and over again, philosophers boiled down to their essence. For Aristotle’s deduction: “it’s possible to use deduction to ‘understand’ everything, but your premises need to be carefully vetted or your understanding will bear no relation to reality”. For Peirce’s pragmatism: “your definitions need to be testable or they need to be reframed to be so”. I ended each lecture by forcing my students to recall the takeaways, so that the takeaways would be the last thing they remembered as they left.

I also distributed mind maps, showing how each philosopher built on the last. The mind maps were distributed at the beginning as empty, then filled in with each subsequent lecture and its takeaways. Not only did this give students a greater understanding of how each philosopher fit into the course, but it gave them a clear sense of progress to see their map filled in.

Philosophy is one of humanity’s greatest achievements. The fact that it’s been relegated to just a collection of neat arguments in a classroom is a tragedy. We live in an age of faulty arguments, misleading news, and a seeming abandonment of even a pretense of ethics. Philosophy can change the way people see the world, if only it’s taught that way.

I’ve discussed the details of how I approached my class below, and also left a link for the course materials I developed. I haven’t touched the material in a couple years, but it’s my hope that others will be able to use it to develop similar philosophy courses.

A Google Drive link for the course materials I developed

The details of how I structured my course

How to think and takeaways

In “how to think”, we first discussed arguments and their limitations. Arguments are the language of philosophy (and really the language of academia). Being unable to form a philosophical argument and attempting philosophy is like attempting to study math without forming equations. You can appreciate it from the outside, but you won’t learn anything.

To introduce arguments, I used Socrates, of course. His arguments are fun and counterintuitive, but they also are very clear examples of the importance of analogy and definitions in philosophical arguments. Socratic arguments always started with careful definitions, and always proceeded by analogy. This same structure is omnipresent in philosophy today, and extends to other fields (e.g. legal studies) as well.

To discuss the limitations of this approach, I brought in Charles Sanders Peirce’s pragmatic critique of philosophical definitions, and later Wittgenstein’s critique of “language games”, which can easily be extended to analogies. As should probably be clear from my bringing in 19th- and 20th-century philosophers, I wasn’t aiming to give students a chronological understanding of how argumentation developed in philosophy. I was aiming to give them a tool (argumentation), and show them its uses and limitations.

From there I went on to how to understand new things through deduction and induction. It is easy for philosophy courses, at this point, to discuss deduction, discuss induction, and then discuss why each is unreliable. This leaves the student with the following takeaway: there are only two ways to know anything, and both are wrong. Therefore, it’s impossible to know anything. Given that this is obviously not the case, philosophy is dumb.

I really, really wanted to avoid that sort of takeaway. Instead, I again wanted to give students a sense of deduction and induction as tools with limitations. So I started off students with Aristotle and Bacon as two believers in the absolute power of deduction and induction, respectively. I took care to make sure students knew that there were flaws in what they believed, but I also wanted students to respect what they were trying to do.

For deduction, I then proceeded to use Cartesian skepticism to show the limitations of deduction, and then Kantian skepticism to show the limitations of deduction even beyond that. This reinforced the lesson I taught with Socratic arguments: deduction is powerful, but the premises are incredibly important. Aristotle never went far enough with questioning his premises, which is why so much of his reasoning was ultimately faulty.

Discussing the limits of induction was more interesting. From the Bacon lesson, my students understood that induction was omnipresent in scientific and everyday reasoning. It obviously works. So, Hume’s critique of induction is all the more surprising for its seeming imperviousness. Finally, bringing in Popper to help resolve some of those tensions was a natural conclusion to how to think.

At the end of this section (which took 10 classes, 2 hours each), my students had learned fundamentals of philosophical reasoning and its limitations. My students were prepared to start to apply this reasoning in their own academic and personal lives. They were also prepared to think critically about our next sections, how to live and how things should work.

They did not come away thinking that there were no answers in philosophy. They didn’t inherently distrust all philosophical answers, or think that philosophy was useless. It’s possible to understand the flaws in something without thinking it needs to be thrown out altogether. That was the line I attempted to walk.

How to live and takeaways

Once I finished teaching my students how to think philosophically, I embarked on telling my students how philosophers thought they should live. My theme for this segment was a quote I repeated throughout, from Rilke, “For here there is no place that does not see you. You must change your life.”

In other words, I wanted to introduce my students to philosophy that, if they accepted it, would change the way they chose to live their lives. Ethical philosophy now is often treated like the rest of philosophy, something to argue about but not something to change yourself over. In fact, surveys show that professors of ethics are no more ethical than the average person.

This is a damn shame. It doesn’t have to be this way, and it wasn’t always this way. In fact, it’s not even this way for philosophy outside of the academy today. I’ve personally been very affected by my study of existentialism and utilitarianism, and I know stoicism has been very impactful for many of my contemporaries.

That’s the experience I wanted for my students. I wanted them to engage critically with some major ethical philosophies. If they agreed with those ethical philosophies, I wanted them to be open to changing the way they acted. In fact, I specifically asked them to do so.

The ethical philosophers I covered were the ones that I felt were most interesting to engage with, and the most impactful to me and to thinkers I’ve respected.

First, I covered Stoicism. I asked my students to consider the somewhat questionable philosophical basis for it (seriously, it’s really weird if you look it up), but also consider the incredibly inspiring rhetoric for it. If Hume is right, and thoughts come from what you feel and are only justified by logic, then the prospect of controlling emotions is incredibly appealing. Even if he isn’t, any philosophy that can inspire both slaves and emperors to try to master themselves is worth knowing. Plus, the chance to quote Epictetus is hard to pass up.

I then covered Kant, as an almost polar opposite to Stoicism. Kant’s categorical imperative ethics is well reasoned and dry. You can reason through it logically and it’s interesting to argue about, but it’s about as far away from inspiring as you can get. Even the core idea: “Act only according to that maxim whereby you can, at the same time, will that it should become a universal law,” is uninspiring, and it’s very hard to imagine acting as if everything you did was something everyone else should do. As I asked my students, how do you decide who gets the crab legs at a buffet? But my students needed to decide for themselves: did they want to follow a logical system of ethics, or an inspiring one?

We then covered utilitarianism. This, as I’ve mentioned, is something I’m biased towards. My study of utilitarianism has changed my life. I donate far more to charity than most anyone I know because of utilitarian arguments: keeping a hundred dollars per month more or less for me does not affect my life in the slightest, but it can make an incredible impact on someone less fortunate.

I presented two sides to utilitarianism: the reasoned, calm utilitarianism of Bentham, and the radical demands of Peter Singer. For Bentham, I asked my students to consider how they might consider utilitarianism in their institutions: can they really say if their institutions maximize pleasure and minimize pain? For Singer, I asked my students to consider utilitarianism in their lives: why didn’t they donate more to charity?

What I wanted my students to think about, more than anything, was how and if they should change the way they live. Ethical philosophy, as it was taught at Princeton, was largely designed to be left in the classroom (with the notable exception of Peter Singer’s class). Ethical philosophers today, having been steeped in this culture, likewise leave their ethics in their offices when they go home for the night. To me, that’s as silly as a physics professor going home and practicing astrology: if it’s not worth taking out of the classroom, it’s not worth putting into the classroom.

Finally, we covered existentialism. I knew my students, being in their late teens and early twenties, would fall in love with the existentialists. It’s hard not to. At that age, questions like the purpose of life and how to find a meaningful job start to resonate as students start running into those issues in their own lives. The existentialists were the only ones to meaningfully grapple with this.

My students came into this section with the tools to understand ethical philosophies. They came out of it with ideas that could alter the course of their lives, if they let them. That, to my mind, is what philosophy should be. Of course, we weren’t done yet. Now that they had frameworks to think about how their lives should be, I wanted them to think about how institutions should be.

How things should work and takeaways

Teaching political philosophy to Chinese students is interesting and complicated, but not necessarily for the reasons you’d expect. I wanted to teach my students the foundations of liberalism, and, when I first taught the course, I naively thought that I’d be starting from ground zero. I wasn’t. In fact, as I was informed, Chinese students go over Locke in high school, and often John Stuart Mill in college. They’re just thoroughly encouraged to keep that in the classroom.

So, my task wasn’t to introduce students to the foundations of liberalism. My task turned out to be the same as the impetus for this course: to make political philosophy relevant.

This is actually tough. Almost all political philosophy is, frankly, so abstract as to be useless. While I was teaching the course, for instance, Donald Trump was rapidly ascending to the Presidency (and became President right before one of my classes, actually). Locke didn’t have a ton to say about that sort of thing.

But, instead of avoiding the tension in my attempt to make political philosophy relevant, I tried my best to exploit it. I roughly divided up the section into practical political philosophy and idealistic political philosophy. Plato and Locke were idealists; Machiavelli, the Federalists, and Hannah Arendt were practical.

When I discussed Plato and Locke, I wanted to discuss their ideas while making it clear they had zero idea how to bring them about. Plato, for his Republic, needed to lie to everyone about a massive eugenics policy. Locke, for his liberal ideals, came up with an idea of property that was guaranteed to please nobody with property. They’re nice ideas (ish), but their most profound impacts are just how people have used them as post-hoc justifications for existing ideals (e.g. the Americans with Locke’s natural rights).

I wanted my students to understand how Machiavelli exploited the nitty gritty of how ruling actually worked, and did so with a ton of examples (inductive reasoning). Even in his “idealistic” work, Discourses on Livy, he wrote his ideals with a detailed knowledge of what did and did not work in the kingdoms of his time.

For the Federalists, I discussed similarly how much more involved they were with property. The Federalists listed Locke as an influence, but they actually had to build a country. They wrote pages upon pages of justifications and details about taxes, because they knew the practicalities of “giving up a little property to secure the rest of it” often led to bloodshed.

Finally, I ended the section with a discussion of Hannah Arendt’s banality of evil. In a time of increasing authoritarianism around the world, I wanted my students to be aware of the parts of political philosophy that would immediately impact them. They were likely not to be rulers or princes, but they were likely to be asked to participate in an evil system if they entered politics (especially in the China of today). I wanted them to be acutely aware of the bureaucracy that made evil governments possible, and the mindset that could stop them.

My political philosophy section ended with the takeaways that politics can be analyzed with the same thinking tools as the rest of philosophy, and weighed with the same ethics of how to live. The minutiae are complicated, but it is not a new world.

Final takeaways

In the end, the fact that nothing is a new world was my intended takeaway from the entire course. Philosophy underpins everything. It is the grammar of thinking. Scientific experiments, legalistic arguments, detailed historical narratives: all of these methods of making sense of the world have their roots in philosophy and can be analyzed philosophically.

And, if everything can be analyzed philosophically, then you might as well start with your life and the society you live in. It’s not enough to just analyze, though. Philosophy should not be something to bring out in the classroom and then put away when you come home. If the way you live your life is philosophically wanting, change it. If your society is on the wrong course, fix it, even if you can only fix it a little.

There’s nothing worse in education than lessons that have no impact on the student. Likewise, there is no higher ideal for education than to permanently change the way a student evaluates the world. The classroom should not simply be a place of empty rhetoric or even entertainment. To paraphrase Rilke, “For there there should be no place that does not see you. You must change your life.”

[Once again, Google Drive link for all my course materials].